What is generative AI?

Generative AI is a type of artificial intelligence that can respond to user input with natural-language answers, or generate high-quality images based on your keywords.

For example, if you ask a generative AI system what you should make for dinner, it might respond with a popular recipe. Ask for an image of a cat sitting on a chair and you might get a selection of artistic renditions in different styles.

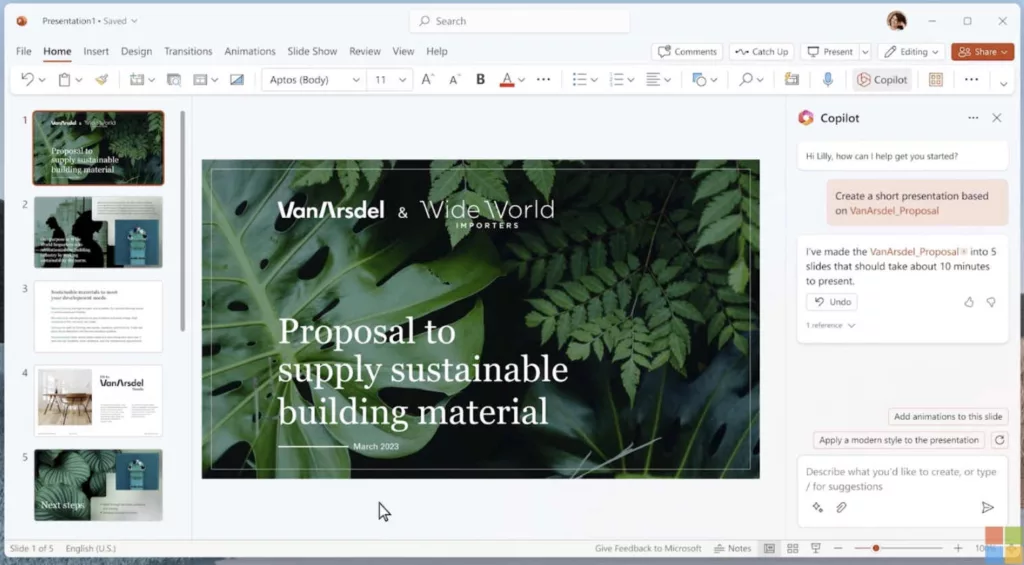

The most famous generative AI of all is ChatGPT, but it already has a lot of company. Google has Bard, for instance, while Microsoft has introduced a whole family of generative AI products under the Copilot brand, including Windows Copilot and Microsoft 365 Copilot.

Nor do you need to look far for generative AI art examples. We provide separate guides to getting the best AI art from Midjourney and from Adobe Firefly in Photoshop.

Table of contents:

- How does generative AI work?

- Are LLMs the same as AI?

- What’s the difference between generative AI and discriminative AI?

- What are the applications of generative AI?

- What are some typical generative AI use cases?

- How do search engines use generative AI?

- How else can I access generative AI?

- What are the downsides of AI?

- Does generative AI breach copyright?

- Is generative AI a security risk?

How does generative AI work?

Generative AI models are “trained” by ingesting very large, wide-ranging datasets of written content or images. For example, the GPT-4 language model is based on text from public websites, academic papers, legal documents, works of fiction and numerous other sources.

Analysing these resources allows the program to build up a detailed statistical model of how words and sentences fit together. Many models also go through a stage of human training, in which testers rate the AI’s responses and steer it away from incorrect or unwanted output.

The result is an engine that can assemble new sentences and paragraphs on a specific theme. It generates text a word (or part of a word) at a time, repeatedly predicting a plausible next piece based on the patterns it has learnt.
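To make the predict-the-next-word idea concrete, here's a drastically simplified toy in Python. It is a "bigram" model: it learns which word tends to follow which in a tiny made-up corpus, then generates text by repeatedly picking a plausible next word. Real LLMs such as GPT-4 use large neural networks that take far more context into account than a single preceding word. Treat this as an illustration of the principle only; the corpus and function names are invented for the example.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> str:
    """Build text one word at a time by sampling a word that followed the previous one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        options = model.get(output[-1])
        if not options:  # no known continuation: stop early
            break
        # Words that followed more often appear more often in the list,
        # so they are proportionally more likely to be picked.
        output.append(rng.choice(options))
    return " ".join(output)


# Toy training corpus (made up for this example).
corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
model = train_bigrams(corpus)
print(generate(model, "the", max_words=8))
```

Every adjacent pair of words in the output occurred somewhere in the training text, yet the sequence as a whole may never have appeared there.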

Are LLMs the same as AI?

An LLM is a specific type of generative AI. The name stands for “large language model”, and, as that implies, it refers to AI systems that are focused on natural-language interactions.

While AI image generators work on a similar principle, they operate in a slightly different way, drawing on elements of other images they have analysed to create new, visually coherent images to fit a requested brief.

What’s the difference between generative AI and discriminative AI?

These are two broad categories of artificial intelligence model. LLMs and image generators are both examples of generative AI – that is, AI systems that use what they have learnt in training to build responses to user input.

Discriminative AI, by contrast, uses its training data to make decisions about the user input. For example, discriminative AI is used in speech recognition systems, to identify the words that someone is saying by comparing their speech to the sounds that the model has been trained on.
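As a rough illustration of the discriminative idea, the toy below is a logistic regression classifier. Logistic regression is a textbook discriminative model: it learns the probability of a label given an input, rather than learning to produce new inputs. The one-number "audio feature" and the yes/no labels are made up for the example. Real speech recognisers use far richer features and much larger models.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs: list[float], ys: list[int], lr: float = 0.1, epochs: int = 2000) -> tuple[float, float]:
    """Fit p(label = 1 | x) = sigmoid(w * x + b) by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def classify(w: float, b: float, x: float) -> int:
    """Return the more probable label for a new input."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


# Hypothetical one-number feature per audio clip: label 1 = "yes", 0 = "no".
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(xs, ys)
print(classify(w, b, -2.5), classify(w, b, 2.5))  # → 0 1
```

The trained model can only answer "which label?" for an input it is given. Unlike a generative model, it has no way to produce a new clip or sentence of its own.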

What are the applications of generative AI?

Simple generative AI tools have long been used to create natural-language descriptions of data such as weather forecasts or stock reports. In recent years, more advanced LLMs have gained the ability to automatically generate text on any given subject, from marketing copy to speeches, presentations and even legal documents.
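Simple tools of this kind have often relied on hand-written rules and fill-in-the-blank templates rather than learnt models. The Python sketch below shows that general pattern for a weather summary. The thresholds, wording and function name are illustrative assumptions, not taken from any real forecasting product.

```python
def describe_weather(city: str, high_c: int, rain_chance: int) -> str:
    """Turn a few forecast numbers into a sentence using fixed rules and templates."""
    # Rule 1: pick a phrase for the temperature.
    if high_c >= 25:
        feel = "a warm day"
    elif high_c >= 12:
        feel = "a mild day"
    else:
        feel = "a chilly day"

    # Rule 2: pick a phrase for the chance of rain (a percentage).
    if rain_chance >= 70:
        rain = "rain is likely, so take an umbrella"
    elif rain_chance >= 30:
        rain = "there's a chance of showers"
    else:
        rain = "it should stay dry"

    # Slot the chosen phrases into a fixed sentence template.
    return f"{city} can expect {feel} with a high of {high_c}°C, and {rain}."


print(describe_weather("London", 18, 40))
# → London can expect a mild day with a high of 18°C, and there's a chance of showers.
```

Every possible sentence is fixed in advance by the rules. Modern LLMs, by contrast, can write about topics their developers never explicitly anticipated.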

They can also process user input, generating summaries of longer texts or extracting key points from customer feedback.

Engines that have been trained on computer code can generate new code on demand – although some human review is often required to ensure the code works as intended.
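One lightweight way to carry out that review is to check generated code against cases whose answers you already know. In the Python sketch below, `is_leap_suggested` stands in for a hypothetical AI-suggested function with a plausible-looking bug. The helper, the function names and the test cases are all illustrative, not part of any real tool.

```python
from typing import Callable


def failing_cases(candidate: Callable[[int], bool], expected: dict[int, bool]) -> list[int]:
    """Return every input where the candidate's answer differs from the expected one."""
    return [year for year, want in expected.items() if candidate(year) != want]


# Hypothetical AI-suggested version: forgets that years divisible by 400 ARE leap years.
def is_leap_suggested(year: int) -> bool:
    return year % 4 == 0 and year % 100 != 0


# Reviewed version following the full Gregorian calendar rule.
def is_leap_reviewed(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Known answers that a human reviewer has checked independently.
KNOWN = {2024: True, 2023: False, 1900: False, 2000: True}

print(failing_cases(is_leap_suggested, KNOWN))  # → [2000]
print(failing_cases(is_leap_reviewed, KNOWN))   # → []
```

Tests like these only catch the mistakes you think to check for, so they supplement a careful read of the code rather than replace it.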

What are some typical generative AI use cases?

Any organisation that needs to generate text or images, such as those in the media and marketing industries, can make use of generative AI tools. LLMs can also power user-facing chatbots, for purposes such as providing sales advice and customer support in friendly natural language.

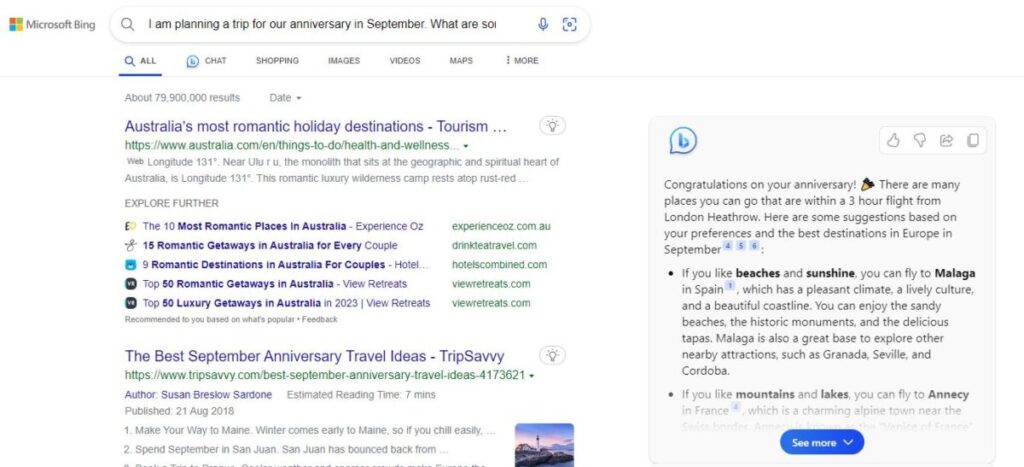

For individuals, web search engines can use generative AI to provide an interactive, conversational interface to online content. We have already seen this with Microsoft’s AI-powered Bing.

How do search engines use generative AI?

Google recently launched its AI-powered “Search Generative Experience”, or SGE. This adds AI-generated explanations and context at the top of search results, to help users better understand the results they’re seeing.

Microsoft’s Bing goes further, incorporating an AI chat feature that lets you ask questions in plain English and get natural-language answers based on the information in its web index. You can then follow up with additional clarifications or requests, homing in on exactly the information you want.
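Follow-ups like these work because chat-style AI systems typically pass the earlier turns of the conversation back to the model along with each new question. The Python sketch below illustrates that idea, using a stand-in function in place of a real model. The class, the message format and `stand_in_model` are hypothetical, and this is not a description of Bing's actual implementation.

```python
from typing import Callable

Message = dict[str, str]


class ChatSession:
    """Keep the whole conversation so each follow-up is answered in context."""

    def __init__(self, model: Callable[[list[Message]], str]) -> None:
        self.model = model
        self.history: list[Message] = []

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        # The model is handed every earlier turn, not just the latest question.
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def stand_in_model(history: list[Message]) -> str:
    """Hypothetical placeholder for a real LLM: reports how much context it received."""
    questions = [m["content"] for m in history if m["role"] == "user"]
    return f"Answering {questions[-1]!r} with {len(questions) - 1} earlier question(s) in view."


chat = ChatSession(stand_in_model)
chat.ask("Best hiking trails near Edinburgh?")
print(chat.ask("Which of those are suitable for children?"))
```

Because the first question travels with the second, a real model can work out what "those" refers to.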

Google also offers its own AI-powered chat service called Bard; this isn’t designed to directly fetch web content in the way that Bing is, but it can still answer a huge range of questions, thanks to the massive body of information that it’s been trained on.

How else can I access generative AI?

One of the best-known generative AI services is OpenAI’s ChatGPT. This provides a simple chatbot interface, allowing you to ask any questions you like, or set tasks such as writing a poem or creating a web page.

You can sign up for free to use the popular GPT-3.5 LLM, while premium subscribers paying $20 per month get access to the more advanced GPT-4. There’s also API pricing available for businesses wanting to build GPT-4 capabilities into their own applications.

We explore whether businesses should choose ChatGPT Enterprise or ChatGPT Plus in a separate article.

What are the downsides of AI?

As generative AI gains popularity, several potential downsides have become apparent:

- AI-generated text requires independent fact-checking. This is because LLMs are susceptible to “hallucination”, where the model generates authoritative-sounding statements about people who never existed or events that never happened.

- Generative AI is likely to put people out of work, as computers take over tasks from professional writers and artists.

- As AI-generated content becomes more prevalent, future AIs will increasingly be trained on content that was itself generated by an AI. This could lead to a drop in accuracy and overall quality, as well as a loss of originality and creativity.

- The computers that run the AI models consume a huge amount of energy, raising concerns about the carbon footprint and overall environmental costs of a growing shift to AI.
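The concern about AIs training on AI-generated content is sometimes called "model collapse". It is often illustrated with toy simulations like the Python sketch below. Here the "model" is simply a bell curve (a normal distribution) that is refitted, generation after generation, only to samples produced by the previous generation. This is a heavily simplified assumption, far removed from a real LLM. The sample size and number of generations are arbitrary choices.

```python
import random
import statistics


def retrain_on_own_output(generations: int, sample_size: int, seed: int = 0) -> list[float]:
    """Toy 'model' = a normal distribution. Each generation is fitted only to samples
    drawn from the previous generation. Returns the fitted spread after each round."""
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0  # generation 0: fitted to "real" data with spread 1.0
    spreads = [spread]
    for _ in range(generations):
        # Generate synthetic data from the current model...
        samples = [rng.gauss(mean, spread) for _ in range(sample_size)]
        # ...then train the next model on that synthetic data alone.
        mean = statistics.fmean(samples)
        spread = statistics.pstdev(samples)
        spreads.append(spread)
    return spreads


history = retrain_on_own_output(generations=500, sample_size=20)
print(f"start: {history[0]:.3f}, after 500 generations: {history[-1]:.3g}")
```

Small samples tend to under-represent rare, unusual values, so the fitted spread tends to shrink as the rounds go on. The toy model's output becomes steadily less varied, loosely mirroring the loss of originality described above.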

Does generative AI breach copyright?

In September 2023, a group of authors including John Grisham and George R.R. Martin filed a lawsuit against OpenAI, claiming that their works had been unlawfully included in the training data used to create the GPT-3.5 and GPT-4 LLMs.

Separately, web hosts and online news providers have sued the company, claiming that it “scraped” content from their websites for training without permission.

It remains to be seen how these cases will be resolved, and what implications this will have for the future of generative AI.

Is generative AI a security risk?

LLMs may learn not only from their initial training data, but also from their ongoing interactions with users. This raises concerns for confidentiality: in April 2023, it was discovered that Samsung staff had been using ChatGPT to help with meeting notes and code development. As a result, commercially sensitive information was shared with OpenAI, where it could potentially be used to train future versions of the model.

To avoid further leaks, Samsung has banned staff from using generative AI tools in this way.

Any individual or company considering using an AI tool to process their data should consider the sensitivity of the information they’re sharing, and carefully examine the operator’s privacy policy.
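For text you do decide to share, one small precaution is to strip out obvious identifiers first. The Python sketch below masks email addresses and long runs of digits such as phone numbers. The patterns are illustrative assumptions that will miss many kinds of sensitive data, so this complements, rather than replaces, thinking carefully about what you share and reading the operator's privacy policy.

```python
import re

# Deliberately simple patterns: real personal data takes many more forms.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LONG_NUMBER = re.compile(r"\b\d(?:[\s-]?\d){7,}\b")  # 8+ digits, e.g. phone or account numbers


def redact(text: str) -> str:
    """Mask obvious email addresses and long digit runs before the text is shared."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)


note = "Call Jo on 020 7946 0958 or email jo.bloggs@example.com about the Q3 figures."
print(redact(note))
# → Call Jo on [NUMBER] or email [EMAIL] about the Q3 figures.
```

Names, project details and business figures pass straight through a filter like this, which is why the judgement described above still matters.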

Summary

- Generative AI can produce rich, detailed answers to questions in plain English, or produce other results such as images or computer code.

- The output of a generative AI model is built from patterns learnt from the data ingested during its initial training.

- Because of the way the models work, the accuracy of AI-generated text cannot be guaranteed.

- The rise of AI systems raises issues about their use of training material, and information subsequently provided by users.
