Adobe announces CR pin for AI images

Tell the real from the deepfake with a new "icon of transparency" that identifies AI-generated images and videos. Richard Trenholm explains how the Content Credentials icon (the CR pin) will work.

Generative AI has thrown creativity open to anyone and everyone, to the point where it is increasingly hard to know whether an image is "real". Now Adobe, Microsoft and a host of other companies are tackling that problem with a simple symbol designed to flag deceptive photos and deepfake videos.

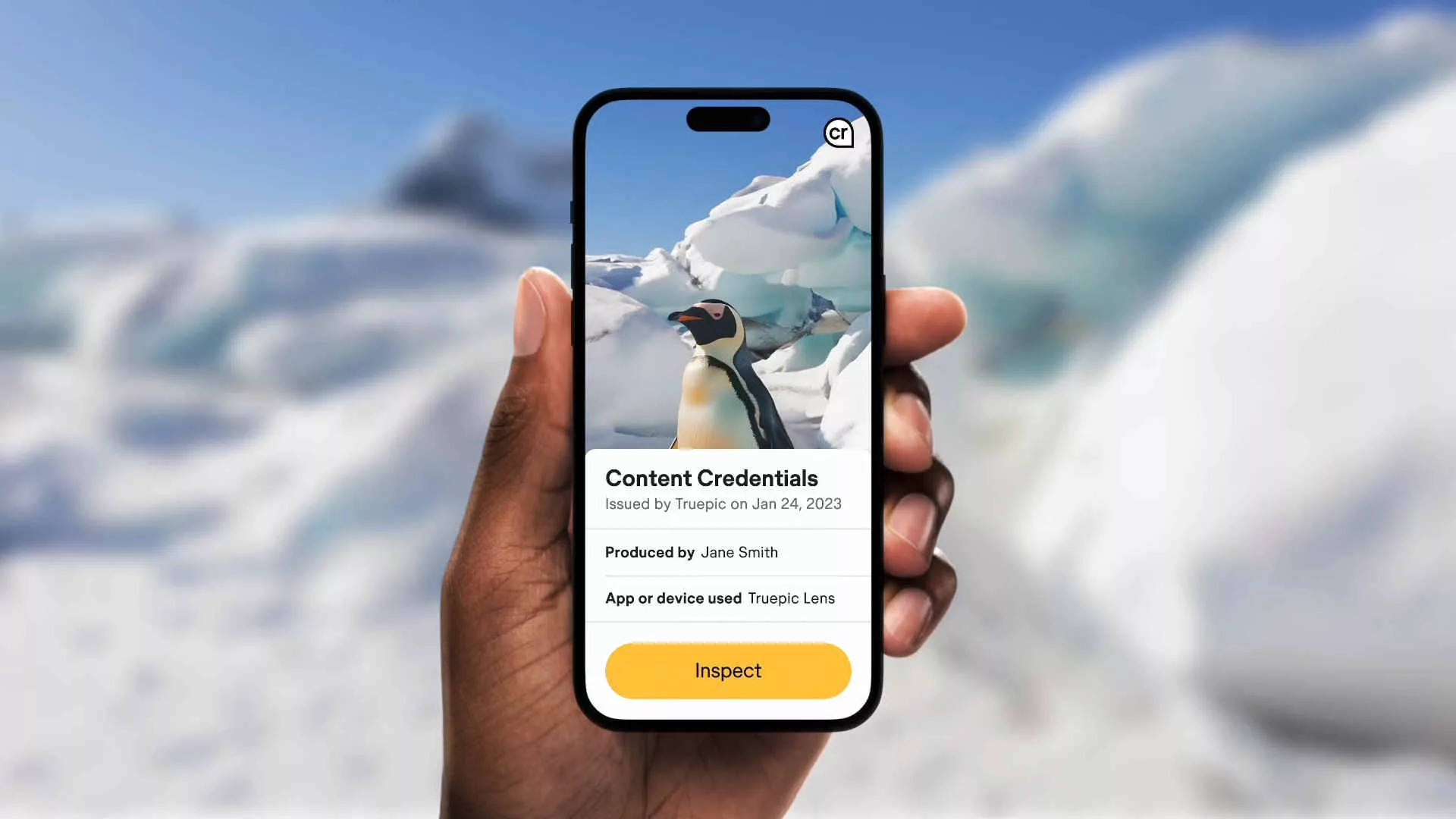

The new symbol displays the letters "CR" on images and videos made with AI. Described as an "icon of transparency" or a "digital nutrition label", it is essentially a visible watermark identifying content created with AI tools.

The CR icon (which, slightly awkwardly, stands for "Content Credentials") can be added to the image itself, and viewers can hover over it to see how the image was created, including which AI tools were used and whether it has been edited.

Even if the creator chooses not to display the symbol, the information will still be permanently encoded in the image or video's metadata. Websites and apps can read that metadata and display the icon regardless of the creator's choice.

Industry backing for CR pin

The open source icon is the brainchild of the Coalition for Content Provenance and Authenticity (C2PA), a group whose members include tech companies Adobe, Arm and Intel; camera makers Nikon, Leica and Sony; the BBC; and marketing giant Publicis.

The symbol isn't quite an industry standard yet, as Google has a rival watermarking system called SynthID. But the Content Credentials pin will be used in Adobe software such as Photoshop and Premiere, as well as in Adobe's generative AI model Firefly.

Microsoft, meanwhile, will soon phase out its own AI watermark and use the new symbol to tag AI content made with Bing Image Generator.

What the CR pin tells you

The CR pin also identifies an image's creator. It doesn't, however, address the accusation from (human) artists that AI image generators such as DALL-E, Midjourney and Firefly plagiarise their artwork, a concern that could lead to copyright headaches for companies using AI-created imagery.

But it does address the issue of transparency at a time when AI-generated images and deepfake videos are becoming indistinguishable from the real thing. Tagging AI content is meant to flag synthetic imagery, so viewers can judge which images and videos they see online are trustworthy.

“The importance of trust in that content, specifically where it came from, how it was made, and edited, is critically important,” said Jem Ripley, CEO of marketing company and C2PA member Publicis Digital Experience.

“Of equal importance is ensuring our clients’ brand safety against the risks of synthetic content, and fairly and appropriately crediting creators for their work.”
