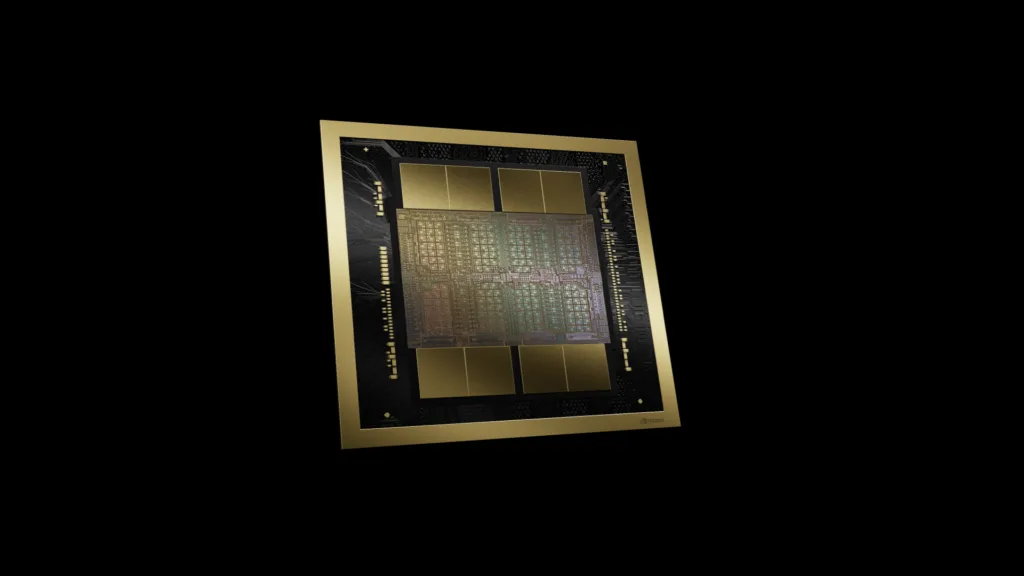

Nvidia unveils Blackwell superchip to create the world’s fastest AI platform

Nvidia has unveiled its latest AI-focused platform, based around the Blackwell “superchip”, and it’s set to be the world’s most powerful AI system, with Nvidia claiming a 30-fold speed-up over its current best.

According to Nvidia, the new Blackwell processor platform can run real-time generative AI on trillion-parameter large language models. The company claims its GB200 NVL72 rack system is up to 30 times faster than its current top offering at large language model inference, and says it pulls off next-generation AI computing at a fraction of the current cost and energy consumption.

Nvidia packs 208 billion transistors onto each Blackwell GPU, compared to 80 billion in its current Hopper chips. The first Blackwell chip is the B200 GPU, which can deliver up to 20 petaflops of compute using the new AI-focused FP4 data type.

The chips will be combined into server racks to run entire data centres, thanks to a new network switch system that links up to 576 Blackwell processors.

According to Nvidia chief executive Jensen Huang, this processing power could be yours for between $30,000 and $40,000 per chip.
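To put those figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the numbers quoted above and assumes, purely for illustration, that every processor in a fully populated 576-chip group is bought at Huang's quoted per-chip price; it ignores networking, CPUs, racks, power and any volume pricing. The function names are hypothetical, not part of any Nvidia tool.

```python
# Figures quoted in the article (transistor counts in billions).
BLACKWELL_TRANSISTORS_BN = 208
HOPPER_TRANSISTORS_BN = 80
MAX_LINKED_PROCESSORS = 576
PRICE_RANGE_USD = (30_000, 40_000)  # per chip, low and high estimates


def transistor_ratio() -> float:
    """How many times more transistors a Blackwell GPU has than a Hopper chip."""
    return BLACKWELL_TRANSISTORS_BN / HOPPER_TRANSISTORS_BN


def chip_cost_range(n_chips: int) -> tuple[int, int]:
    """Low and high cost in USD of the chips alone (assumption: flat per-chip price)."""
    low, high = PRICE_RANGE_USD
    return n_chips * low, n_chips * high


if __name__ == "__main__":
    print(f"Transistor ratio: {transistor_ratio():.1f}x")
    low, high = chip_cost_range(MAX_LINKED_PROCESSORS)
    print(f"{MAX_LINKED_PROCESSORS} chips: ${low:,} to ${high:,}")
```

On these assumptions, the chips alone for a full 576-processor group would run to somewhere between roughly $17 million and $23 million.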

Customers line up for Nvidia Blackwell chips

Nvidia says Amazon, Google, Microsoft, Meta, Dell, Tesla, Oracle and OpenAI will be among the companies using racks of the new chips to power their cloud computing and AI. In its press release, the CEOs of Big Tech were lining up to sing the chips’ praises.

“We’re looking forward to using Nvidia’s Blackwell to help train our open-source Llama models and build the next generation of Meta AI and consumer products,” said Mark Zuckerberg, Founder and CEO of Meta/Facebook.

“By bringing the GB200 Grace Blackwell processor to our data centres globally,” Satya Nadella, CEO of Microsoft, chipped in, “… we make the promise of AI real for organisations everywhere.”

Nvidia even squeezed in a quote from Elon Musk. “There is currently nothing better than Nvidia hardware for AI.”

Nvidia currently has an estimated 80% share of the AI chip market, leaving the rest for rivals such as AMD, but the AI boom is driving demand for faster chips across the industry.


Nvidia Blackwell: history and future

Nvidia unveiled the new architecture, named after pioneering mathematician David Harold Blackwell, at its GTC conference. It also revealed new ‘microservices’ that make it easier for companies to deploy artificial intelligence.

The Nvidia Inference Microservices (NIM) software bundles inference engines, APIs and AI support models into ready-made containers, into which companies can plug their own data.

“Established enterprise platforms are sitting on a goldmine of data that can be transformed into generative AI copilots,” explained Jensen Huang.

“Created with our partner ecosystem, these containerised AI microservices are the building blocks for enterprises in every industry to become AI companies.”
