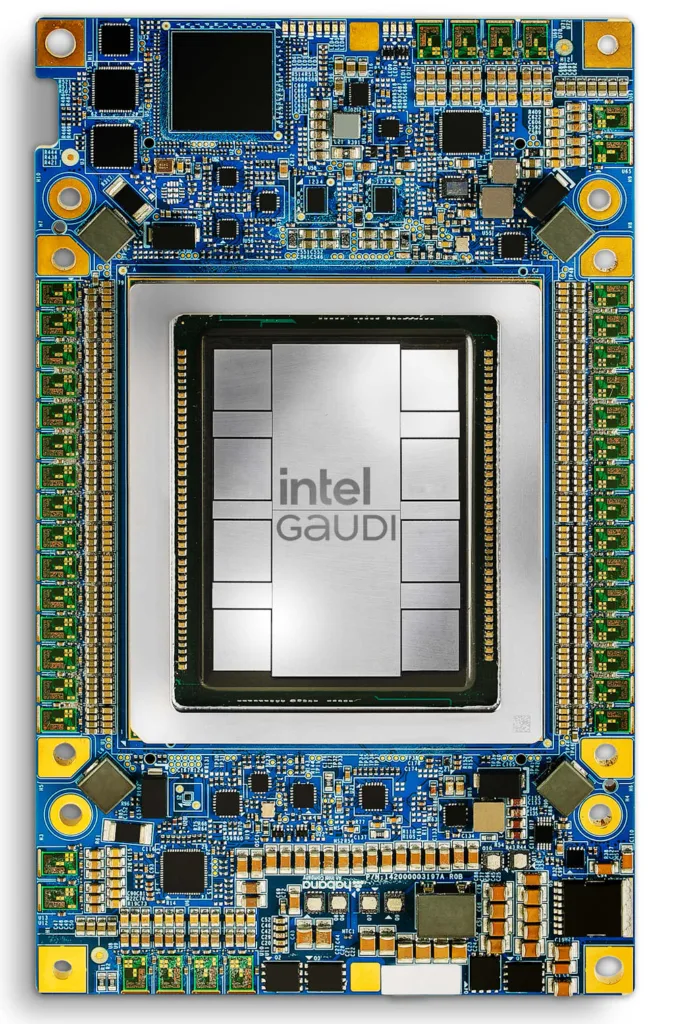

Intel claims Gaudi 3 AI accelerator is typically 40% faster than Nvidia H100

As part of its Intel Vision 2024 event, Intel has revealed performance details for its Gaudi 3 AI accelerator. It claims the chip is four times as fast as Nvidia's dominant H100 AI accelerator in one task, and typically 1.4x (or 40%) faster.

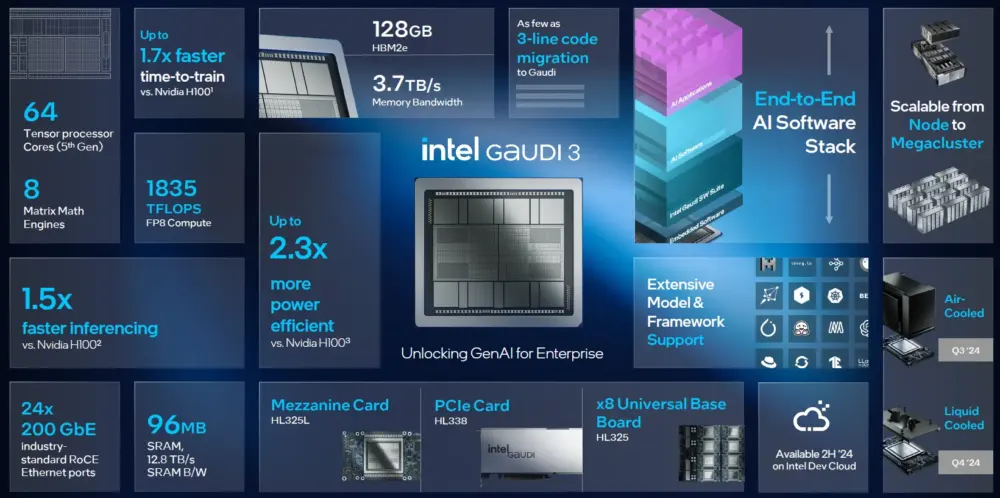

Gaudi 3 is built on a 5nm process, compared with 7nm for the Gaudi 2 AI accelerator, and Intel claims it blasts its predecessor out of the AI water. In particular, it offers twice the AI compute with FP8 precision (an 8-bit floating-point format) and four times the AI compute with BF16 precision (bfloat16, a 16-bit floating-point format).

Gaudi 3 also brings twice the network bandwidth and 50% more memory bandwidth than Gaudi 2.

“All these together provide a significant leap in performance and productivity for AI training and inference on many of the popular large language models and multimodal models,” said Das Kamhout, Vice President & Senior Principal Engineer in the Intel Data Center and AI Group, at a pre-briefing event.

“Overall, we firmly believe Intel Gaudi 3 brings choices to enterprises when they’re weighing considerations such as viability, performance, cost and energy efficiency,” he added.


Form factors and availability

Intel said Gaudi 3 will be available to OEMs – including its launch partners Dell, HPE, Lenovo and Supermicro – in the second quarter of 2024.

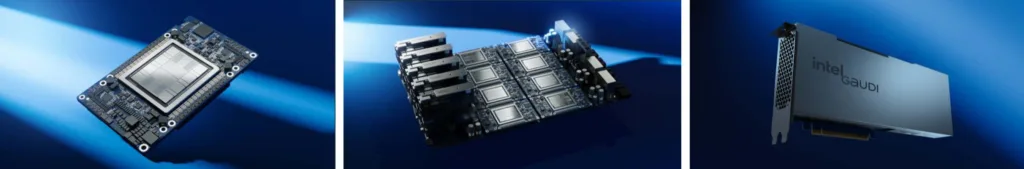

OEMs will have a choice of three form factors: an accelerator card, a universal baseboard and a PCI-Express add-in card.

Intel Gaudi 3 vs Nvidia H100

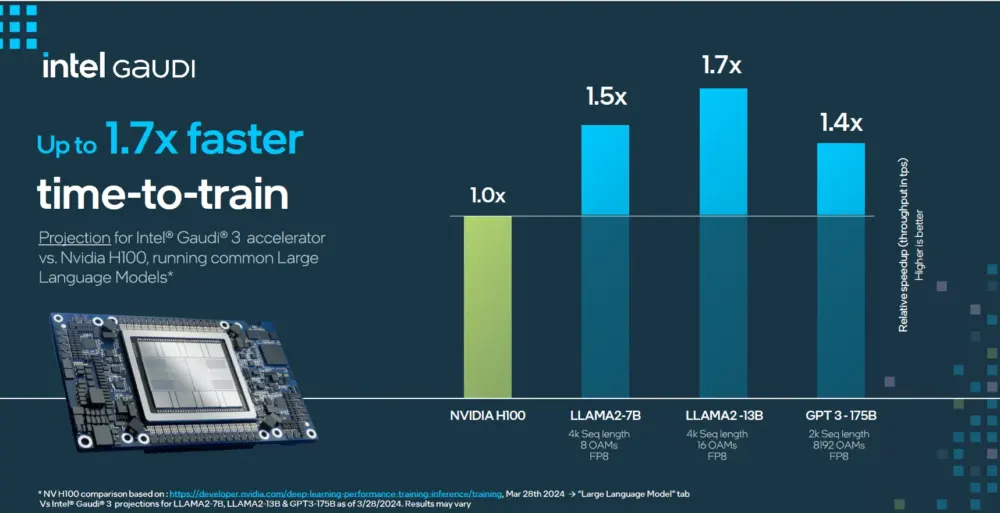

Intel also made beefy comparison claims for Gaudi 3 versus Nvidia's H100 AI accelerator; in particular, that it would deliver 50% better inference performance and 40% better power efficiency.

The caveat is that these are projections, based on specific models. Intel's claim of 50% faster time to train, for instance, was based on the Llama 2 models with 7 billion and 13 billion parameters, and the GPT-3 175 billion parameter model.
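Speedup percentages are easy to misread. The short Python sketch below shows the arithmetic, assuming that "N% faster" means N% higher throughput (the slides as reported do not define it): run time then scales as 1 / speedup, so a 1.5x speedup cuts training time by about a third, not by half. The helper name `time_saved_pct` and the labels are illustrative only, and the factors are Intel's projections, not measured results.

```python
def time_saved_pct(speedup: float) -> float:
    """Percentage of wall-clock time saved for a given throughput speedup.

    Assumes the speedup is a throughput ratio (new / old), so run time
    scales as 1 / speedup.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1.0 - 1.0 / speedup) * 100.0


# Speedup factors quoted in the article (Intel projections, not measurements).
claims = {
    "typical (1.4x, '40% faster')": 1.4,
    "time to train (1.5x, '50% faster')": 1.5,
    "best single task (4x)": 4.0,
}

for label, factor in claims.items():
    print(f"{label}: {time_saved_pct(factor):.1f}% less wall-clock time")
```

The same formula applies to the 40% power-efficiency claim if it means 1.4x the performance per watt: energy per unit of work would fall by roughly 29%, not 40%.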

“I also want to state how easy the migration path is to Gaudi,” said Kamhout, pointing out that much development is done at a high abstraction level. “You only need to input three to five lines of code, or sometimes hardly any, with PyTorch framework and community models from Hugging Face and MosaicML.”

Intel Gaudi 3 vs Nvidia H200

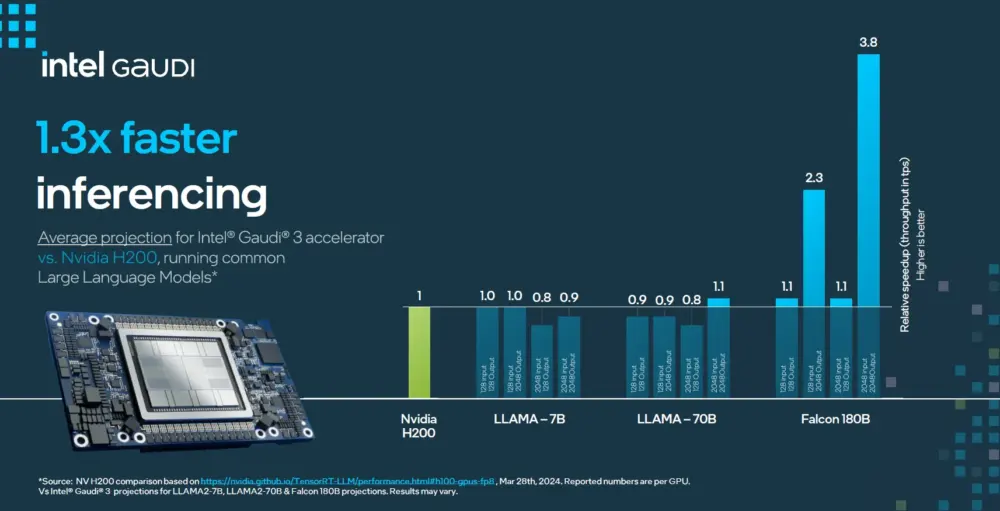

The pre-brief also featured two slides that compared Gaudi 3’s performance against Nvidia’s H200 AI accelerator, which is also expected to launch in Q2 2024.

In what we must assume are carefully picked comparisons, the slides showed the two AI accelerators' performance side by side on Llama 2 with 7 billion and 70 billion parameters, plus the Falcon LLM with 180 billion parameters.

But note these are projections, not measured results.

In general, Gaudi 3 was on par with the H200 in the Llama performance projections but scored two heavy victories in the Falcon inference tests, where Intel claims it was up to 3.8x as fast.

We suspect that Nvidia could produce some equally compelling benchmark results if it chose to.


Industry backing for Intel Gaudi 3

Gaudi 3 also comes with some big-name backing, including this statement from Stability AI: "Alternatives like Intel Gaudi accelerators give us better price performance, reduced lead time and ease of use with our models taking less than a day to port over."

With software already available on the Intel Developer Cloud, Intel promises developers that they can build their software for Gaudi 2 today and then "migrate it seamlessly" to Gaudi 3 when it is released.
