Meta releases dataset to maximise inclusivity and diversity

To help AI researchers make their tools and processes more inclusive, Meta has released a massive, diverse dataset of face-to-face video clips.

Casual Conversations v2 includes a broad range of diverse individuals, and will help developers assess how well their models work for different demographic groups.

Meta’s VP of civil rights, Roy Austin Jr, said current large language models “lack diversity”. He argues that “the only way to test is to have a diverse model, to have those voices that may not be in the larger models and to be intentional about including them”.

Why it matters

For AI to serve communities fairly, researchers need diverse and inclusive datasets to thoughtfully evaluate fairness in the models they build.

Gathering data that assesses how well a model works for different demographic groups is difficult. That’s due to complex geographic and cultural contexts, inconsistency between different sources and challenges with accuracy in labelling.

With this new publicly available resource, researchers can better evaluate the fairness and robustness of their AI models.

This dataset is designed to maximise inclusion by giving AI researchers more samples of people from a wide range of backgrounds.

How was the database created?

The v2 database was informed and shaped by a comprehensive literature review around relevant demographic categories.

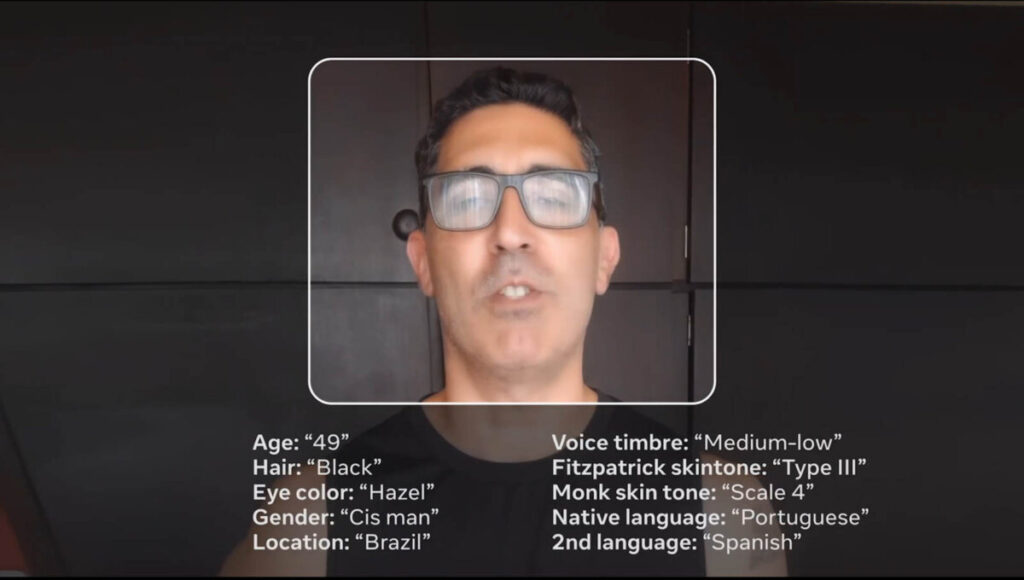

The dataset includes more than 25,000 videos from more than 5,000 people across seven countries. Rather than rely on algorithms, people self-identify their age, gender, race and other characteristics such as disability and physical adornments.

Trained experts then added additional metadata, including voice and skin tones.

The videos, featuring paid participants who gave their consent to be in the dataset, included both scripted and unscripted monologues. Participants were also given the chance to speak in both their primary and secondary languages.

Diverse improvements

Unlike Meta’s earlier dataset, which included few categories and only US participants, v2 offers a more granular list of 11 self-provided and annotated categories.

The self-provided categories include age, gender, language/dialect, geolocation, disability, physical adornments and physical attributes.

To further measure algorithmic fairness and robustness in these AI systems, v2 expanded their geographics to seven countries. Namely Brazil, India, Indonesia, Mexico, Vietnam, Philippines and the United States.

The new dataset will help AI developers address concerns around language barriers and physical diversity, which has been problematic in some AI contexts.

NEXT UP

Ryan Beal, CEO & Co-Founder of SentientSports: “Sports generate some of the richest datasets globally”

We interview Ryan Beal, CEO & Co-Founder of SentientSports, a startup using AI in fan engagement and athlete protection on social media.

Paris 2024: The greenest games ever

How the Paris 2024 Olympic Gamers organisers have lived up to their promise that this is the greenest Olympics ever

Salesforce, Workday team up to launch AI employee service agent

Salesforce and Workday have marked their new strategic partnership with the launch of an AI-powered assistant to handle employee queries.