Google joins C2PA to improve AI transparency and defences against deepfakes

Google has joined forces with other tech giants to help consumers tell machine-generated content apart from human-created content.

Last week the company signed up to join the steering committee of the Coalition for Content Provenance and Authenticity (C2PA), whose members already include the likes of Microsoft, Intel, Adobe, Sony and the BBC. The coalition describes itself as a “global standards body advancing transparency online through certifying the provenance of digital content”.

As generative AI tools like Midjourney and DALL-E creep ever closer to creating content that's indistinguishable from human work, the C2PA is pushing a standard called Content Credentials.

The standard lets creators attach metadata to their content that records how and when it was created or changed. The aim is to give consumers more confidence in the history and provenance of the material they consume. C2PA describes Content Credentials as "tamper proof".

Google will be using Content Credentials alongside its own AI identification efforts, including DeepMind’s SynthID, Google Search’s “About this Image” and YouTube’s content labels.

Flagging bad actors

As well as aiding consumers in identifying content made by AI, the standard could also help highlight material from human bad actors. Think scammers promising wealth-generating strategies direct from the likes of Elon Musk, or politically motivated groups spreading misinformation, or deepfakers looking to mimic public figures.

They all have one thing in common: pumping out content and pretending it’s from a legitimate source.

C2PA hopes the standard will be adopted by the creative industries, professional and amateur news producers, social media platforms and content distributors. If it is, the standard should help consumers work out both whether the digital content they're seeing is AI-generated and whether it remains as its creator intended. In particular, it should reveal whether the content has been manipulated in some way, either by people or by AI.

OpenAI recently announced that it will add C2PA metadata to images created with ChatGPT or DALL-E 3. However, the organisation noted that identifying AI-made content remains challenging.

"Metadata like C2PA is not a silver bullet to address issues of provenance," reads an OpenAI statement about C2PA in DALL-E 3. "It can easily be removed either accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API."

Meta’s AI label

The C2PA is not the only organisation aiming to make it easier to spot digital content made by AI.

Earlier this month, Meta announced that it will start labelling content on Facebook, Instagram and Threads that it believes to be AI-generated. The labels will roll out this year. Meta already marks its own AI-generated photorealistic images in several ways, including visible watermarks and metadata attached to the image.

The company added that it is working with other "industry partners" on common technical standards for identifying AI-generated content.

Related reading: What is Nightshade and poison pixels?

More Google coverage

NEXT UP

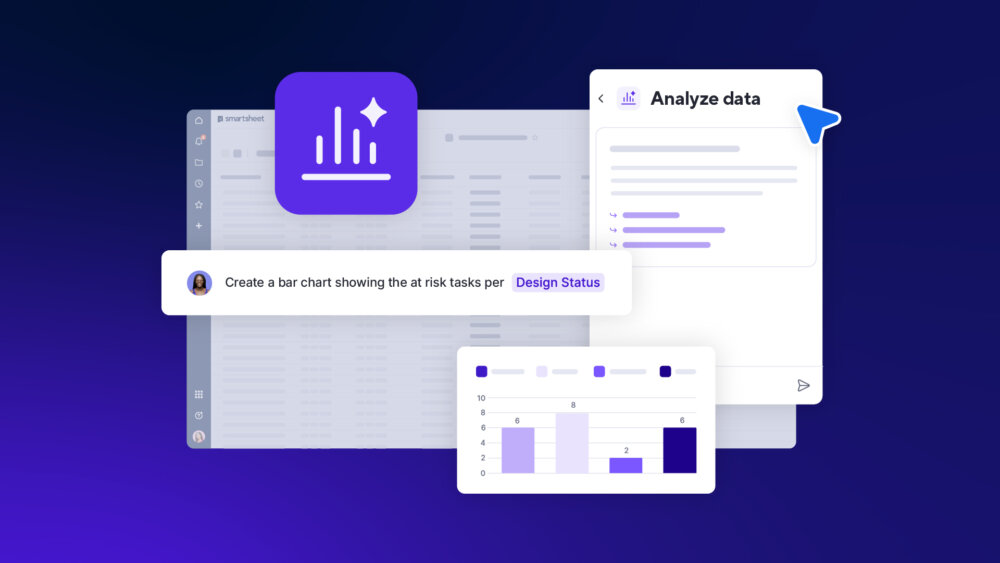

Smartsheet brings secure AI to the enterprise

Smartsheet has added AI tools that it promises will “remove complexity and uncover actionable insights” based on companies’ own data

Andrew Doyle, CEO of NorthRow: “AI and big data are transforming how financial institutions operate”

We interview Andrew Doyle, CEO of NorthRow, an anti-money laundering compliance software provider, to find out how blockchain, AI and cryptocurrency are affecting this crucial sector.

Meta’s Llama LLM makes impact on medicine, education and business

Meta has lifted the lid on how businesses, educators and researchers are putting its large language model, Llama, to work.