Bias in AI: what 200 images of people at work reveal

We used Midjourney to generate more than 200 images of people at work. The images were bold and sometimes beautiful but, as Nicole Kobie explains, they also highlight problems that continue to plague generative AI.

Picture the following professions in your mind: customer service rep, chef, accountant, judge, consultant.

Perhaps you imagined the accoutrements that go with each role. A phone headset at a laptop, knives in a kitchen, spreadsheet after spreadsheet, a white wig in a courtroom, and, well, whatever it is consultants need for what they do. But perhaps you also pictured people in those jobs — and that could reveal something about your own biases or assumptions.

We asked the AI image generator Midjourney to do the same: create 200 images based on a brief job description. Midjourney is one of many image generators that have popped up online during the current AI boom, alongside OpenAI’s DALL-E and Stability AI’s Stable Diffusion.

The results leave much to be desired. In Midjourney’s world, customer service reps are indeed wearing a phone headset, but they’re all women — and not only young, slim and pretty, but clearly ecstatic to be taking calls. A chef is a white man with facial hair; cops are rugged men; developers are white men in glasses, while engineers are slightly more rugged white men in glasses; accountants (male or female) have tousled hair and thick black glasses; judges are old men with white hair, as are vice presidents.

That likely comes as no surprise given the many examples of bias in the output of generative AI, be it text-based systems such as OpenAI’s ChatGPT or image systems such as Midjourney.

In one instance, images generated of Barbies from countries around the world produced a blonde Thai doll. In another test, prompts asking for people, places and food from different countries showed reductive stereotypes of the wider world — all people from India are old men, Mexicans always wear sombreros — while another report revealed higher-paying jobs tend to be pictured using people with lighter skin tones.

The problems we saw weren’t limited to race and gender. All women created by the system were young and pretty, and most people were slim; the only larger-framed individuals were older men.

It’s not just about looks or demographics. Plenty of the results included anachronisms and other flaws that reduced their accuracy, including picturing animals instead of people. A chief security officer is a rugged man in military garb, though one was pictured as a lion in uniform. Cyber security engineers were more diverse (a young man, two images of an older bearded man, and a young Asian woman), but all were pictured in the blue light of multiple displays, like cheesy hacker films from the 1990s. Accountants didn’t use laptops but had stacks of paper. Plumbers wear red hats and sport thick moustaches, à la Mario.

And Midjourney clearly understands even less about what consultants do than the rest of us. While any person in a suit or even office casual would fit the brief, Midjourney instead supplied images of magician-like outfits, complete with top hats in dramatic steam-punk settings. Inexplicably, one of the four consultant images was of a lizard.

Why does it matter? Because the results could cause problems in the real world, where such systems are already being used.

How generative AI works — and doesn’t

To start, why are we getting these shonky results?

There are two core problems with the current crop of generative AI tools: first, the datasets are limited and biased; second, the models themselves are inherently flawed.

Image generation systems like Midjourney are trained differently than OpenAI’s text-generating chatbot. “Although Midjourney is opaque about the exact way its algorithms work, most AI image generators use a process called diffusion,” says Dr TJ Thomson, senior lecturer at RMIT University. “Diffusion models work by adding random ‘noise’ to training data and then learning to recover the data by removing this noise. The model repeats this process until it has an image that matches the prompt.”

He adds: “This is different to the large language models that underpin other AI tools such as ChatGPT. Large language models are trained on unlabelled text data, which they analyse to learn language patterns and produce human-like responses to prompts.”
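The noising half of the process Thomson describes can be sketched in a few lines of Python. This is a generic illustration of a standard diffusion-style noising step, not Midjourney’s undisclosed method; the four-pixel "image", the `beta` noise level and the function names are all assumptions made for the example, and the learned denoising network that reverses the process is left out entirely.

```python
import math
import random

def add_noise(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)    # how much of the current image survives
    spread = math.sqrt(beta)        # how much fresh noise is blended in
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]

def signal_kept(beta, steps):
    """Fraction of the original pixel values remaining after `steps` noising steps."""
    return math.sqrt(1.0 - beta) ** steps

rng = random.Random(0)            # fixed seed so runs are repeatable
image = [0.0, 0.5, 1.0, 0.5]      # hypothetical four-pixel greyscale "image"
noisy = image
for _ in range(100):
    noisy = add_noise(noisy, beta=0.05, rng=rng)

print(noisy)                      # by now, mostly noise
print(signal_kept(0.05, 100))     # share of the original signal that is left
```

After enough steps almost nothing of the original remains. Training teaches a model to undo these steps one at a time, which is what lets it start from pure noise and work towards an image.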

Either way, large datasets are required, and that means hoovering up whatever can be found online. We don’t know exactly what datasets these models are trained on, as the companies developing them aren’t usually transparent, though Midjourney’s CEO David Holz has admitted its dataset came from “a big scrape of the internet”, in particular open datasets.

But we can tell a lot by the output. It’s clear that the collections of images used for training not only reflect but exacerbate our own biases, showing professional jobs as the preserve of white men more often than not.

“The training data is simply not representative enough of the diversity of the world,” says Dr Mike Katell, Ethics Fellow in the Alan Turing Institute’s Public Policy Programme and a Visiting Senior Lecturer at the Digital Environment Research Institute (DERI) at Queen Mary University of London. “There’s a problem of where the datasets come from: if they are being generated in the place where the most activity in creating artificial intelligence is happening, North America and Europe in particular, then the datasets are likely going to reflect those populations.”

After all, he notes, a pilot wouldn’t be a white male in most places in the world where local demographics differ, yet that’s the default that’s likely to get pictured if you ask these AI generators.

Of course, sometimes what we might see as bias is actually reality — just not a reality we want to encourage. That’s especially true when decades of older images are included in datasets. “Despite there being many women or people of colour entering these professions now, their numbers still haven’t caught up in any significant way,” says Katell. “So it’s hard to teach AI to break free of historical patterns.”

He adds that AI isn’t aspirational, though we may well be. “We might be more interested in portraying the ideals than the hard facts… Every day we have conversations about looking at how the world is, trying to project forward how we want it to be. But AI only reproduces at best the present but more likely the past. We need creativity that AI simply doesn’t have and it’s probably, at least in its current state, incapable of having.”

AI lacks not only creativity but also flexibility. “AI models are inherently brittle,” Katell says. “Once we create them and set the weights for them and how they should portray the world, it’s hard to modify those areas.”

He adds that’s part of the challenge with making a model that works for everyone, rather than a specific tool designed for narrow use, where context could be embedded into the system. Instead, these systems are designed to be broadly applicable, which is a strength in some ways but a weakness in others.

Can bias in AI be fixed?

There are some solutions, but don’t expect a panacea for this problem. Users can learn a system’s flaws, and carefully write prompts to avoid them. Developers can improve datasets to be more representative, and they can improve the models themselves.

Let’s start with prompt engineering. Not getting enough diversity in your Midjourney images? Simply ask for more by including the characteristics you’d like in your prompt. Sound easy? It doesn’t always work.

With Katell’s earlier point about pilots around the world in mind, we asked Midjourney to generate an image of a pilot. Three were male, one was female, all were white, and all appeared to be flying 1950s-era aircraft. Asking Midjourney to create “a pilot operating in a country outside of North America or Europe” meant the model spat out images that were more modern in setting but remained white and male. Third time’s the charm: a prompt asking for “a pilot from a diverse background flying a modern plane” returned a set of images with three black men and one white man.

OpenAI’s DALL-E 2 didn’t have any better luck with our pilot query, returning three men from different eras of flight as well as one woman of colour. Asking for pilots outside of North America and Europe still returned three white men and a black woman. Adding in that request for diversity again returned three white men and a woman of colour, this time Asian.

Figuring out the right query requires understanding the way a specific AI works as well as the context for the prompt, since diversity means different things depending on industry or job role, for example. “A word like diversity means a lot of different things, depending on the setting,” Katell says. “We may say we want to see more diversity, but what we’re actually saying is we want more black or brown faces, or non-male, or non-binary, or we want a rainbow of them all.”

Plus, requiring humans to input more specific prompts puts the onus on the user to understand all the different ways bias could come into play, and that’s a lot to expect from any one person. “Being more thoughtful with the prompts used to create content can also help, but we’re all limited by what we don’t know, and authentic and accurate representations are best co-created with diverse cross-sections of the community,” Thomson says.

Continuing to write prompt after prompt might eventually lead to an appropriate result. That not only requires thought but also uses a lot of time. Katell points to stories about generative AI writing a book or creating art: “What’s really hidden in these stories is just how much work it really takes to get these systems to generate that. It’s not just three prompts and this miracle comes out of the system.”

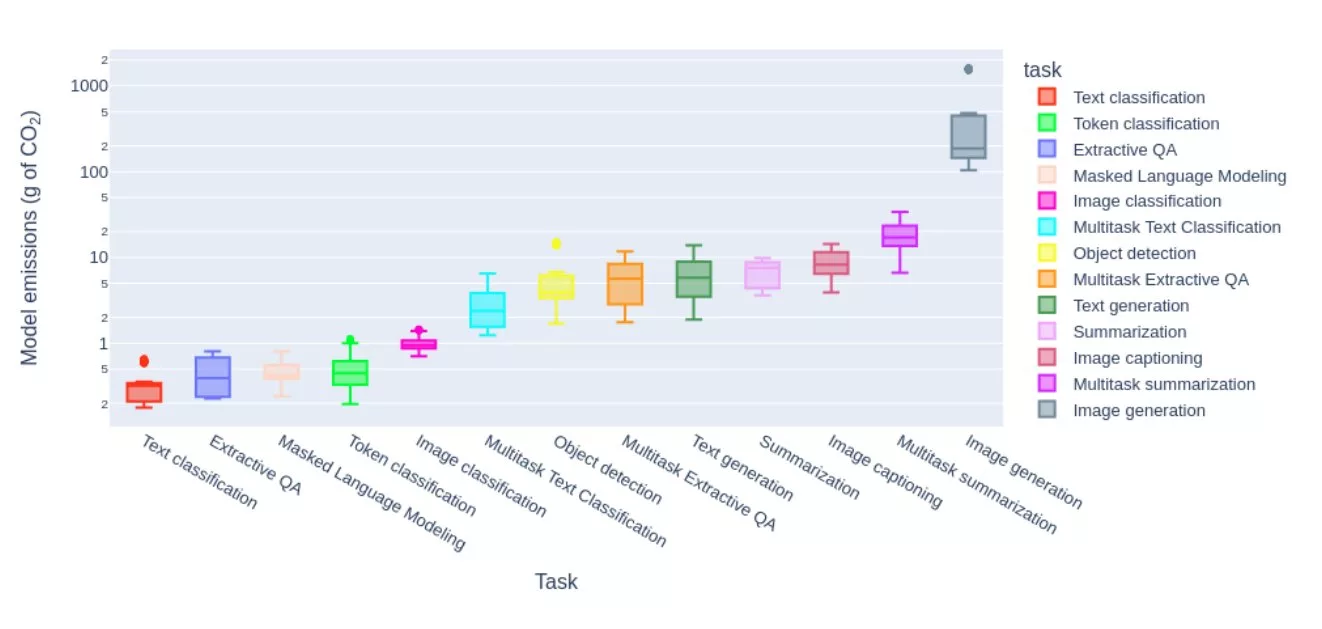

And, as Thomson notes, there are downsides beyond wasted time — in particular when it comes to energy use. “It’s important to also keep in mind the energy cost of generative AI,” he says. “A new study suggests that generating an image with a tool like Midjourney uses the equivalent energy of charging a cell phone.”

Another solution is to improve our training datasets, using images sourced from further afield and including more diverse examples. This is something the industry is starting to work on, with OpenAI hoping to encourage the creation of better training datasets. Then there are the models themselves, with projects such as Hugging Face offering more transparency in the hopes of creating better AI.

“Some AI models have been developed with diversity and inclusivity in mind,” says Thomson. “An example is Latimer, nicknamed the ‘Black GPT,’ which has been trained with more diverse assets and aims to better reflect Black and brown people.”

AI developers are also slowly tweaking systems to improve results, hoping minor adjustments can fix the problem. But Katell doesn’t think bias can or should be engineered out of this type of AI. “We’re asking AI developers to make a lot of choices about our ideal social conditions,” he says. “How much representation should there be in a dataset… someone has to make that choice and it’s actually a very heavy decision to hand off to anybody, let alone a software developer.”

Plus, it requires constant updating in order to use up-to-date datasets and reflect our ever-changing ideals.

Inherent flaws

Of course, Midjourney continues to be worked on — given the results we had, that’s good news. After all, consultants aren’t lizards and not all executives are older white men. This isn’t a finished product, even if the resulting images produced by it and rivals are already being used in marketing and elsewhere. But the results highlight the flaws inherent in these systems, early days though it may be for generative AI.

And this sort of bias matters. First, it risks further cementing discrimination when these images are disseminated widely: if every image depicting tech roles shows white men, it makes it harder to imagine anyone else in that job, consciously or not. Second, these images could be fed back into AI training sets, further exacerbating the problem of white men being seen as the default for all job roles. If we use these systems to flood the web with even more biased images, and then train further systems on those images, we may find it impossible to escape the problems highlighted in our results.
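To see why that feedback loop is worrying, consider a toy simulation. Everything in it is an assumption made for illustration, not a measurement of any real system: the starting share of 70%, the `amplification` factor by which a model exaggerates the majority group, and the `synthetic_fraction` of each new training set that comes from generated images.

```python
def next_share(share, amplification=1.2):
    """Majority-group share in a model's output, given the share in its training data.

    `amplification` > 1 is an assumed exaggeration applied to the odds.
    """
    odds = share / (1.0 - share) * amplification
    return odds / (1.0 + odds)

def simulate(initial_share, generations, synthetic_fraction=0.5):
    """Track the majority share as generated images are mixed back into training data."""
    share = initial_share
    history = [share]
    for _ in range(generations):
        generated = next_share(share)
        # New training set: part existing data, part freshly generated images.
        share = (1 - synthetic_fraction) * share + synthetic_fraction * generated
        history.append(share)
    return history

history = simulate(0.7, 10)
for generation, share in enumerate(history):
    print(generation, round(share, 3))
```

Under these assumptions the majority share climbs with every generation and never comes back down on its own, which is the trap described above.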

Finally, it exposes the flaws in systems that we’re rushing to adopt; perhaps bias should be seen as a sign that generative AI needs more work. Consider these biased images as the canary in the coal mine: if generative AI can’t get these simple image requests right, what else is going wrong with other tasks we give such systems? When Amazon trialled an AI recruitment tool, it reportedly learned to favour male applicants and mark down CVs from women. It wasn’t told to do this, but most previously successful applications had come from men, so it copied our old habits. We can tell from image generators that these systems are biased, so we should reconsider letting AI trained in similar ways make serious decisions.

“I’m somewhat pessimistic that generative AI can really get it right, at least in the short term or how it’s currently designed,” adds Katell. “I think we would need a pretty fundamental breakthrough… to see the systems demonstrate an awareness of context and creativity that resembles what humans are after.”

If you’d like a copy of the 200 images or have any questions related to this article, please reach out through our contact form.


[1] Luccioni, A. S., Jernite, Y., & Strubell, E. (2023). Power Hungry Processing: Watts Driving the Cost of AI Deployment? arXiv:2311.16863
